This page has been moved to our support documentation website.

See https://docs.vpixx.com/vocal/introduction-to-eye-tracking-with-the-trackpixx3.

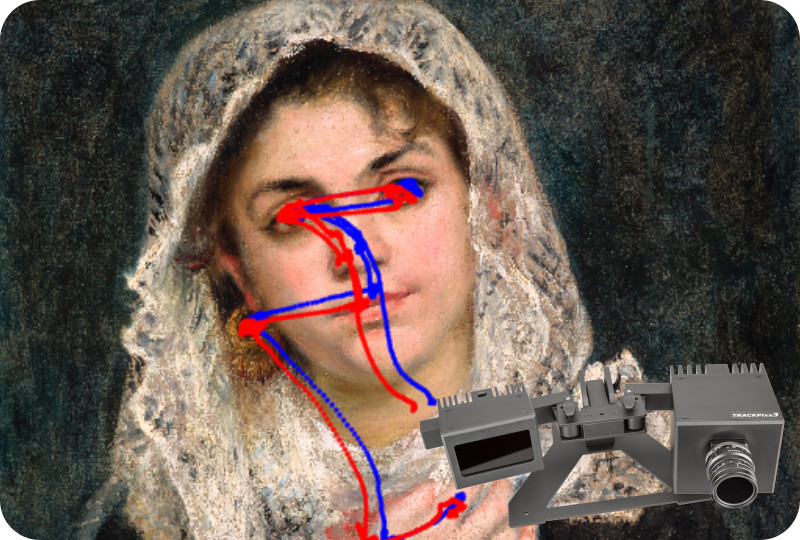

Introduction to Eye Tracking with the TRACKPixx3

This project explores the data output of the TRACKPixx3. It includes code and sample data.

- EyeTracking
- Guide
- MATLAB
- Project
- Psychtoolbox
- TRACKPixx3

Contributed by: Dr. Lindsey Fraser, VPixx Technologies

Date published: May 6, 2020